The generative AI chatbot landscape is becoming an increasingly crowded space. While OpenAI’s ChatGPT may have been the first AI chatbot to gain wide usage and notoriety, there are now a wide range of options available to those looking to experiment with AI-powered chatbots.

Many of these competitor tools also offer distinctive advantages over ChatGPT. As previously discussed in this newsletter, Google Gemini (formerly known as Bard) can provide users with links and citations, allowing them to verify the chatbot’s responses. This can help users catch hallucinations, or false statements that a generative AI tool presents as facts.

While there are a wide range of use cases for generative AI chatbots, one of the most interesting is their application on social media platforms. The most popular social media platforms already have active userbases, as well as massive archives of text and imagery that can be used to train large language models. With that in mind, it’s unsurprising that Meta and X (formerly known as Twitter) have both launched generative AI chatbots powered by their own proprietary large language models.

Interestingly, though, Meta and X have taken distinct approaches to generative AI chatbots, suggesting two different models for how AI chatbots could be integrated with existing social media platforms in the future. These models are particularly important for marketers, who can use both platforms to build strong connections with customers.

With that in mind, this instalment of our newsletter will compare Meta and X’s approaches to social AI chatbots and consider some of the ways that AI tools might change how marketers connect with audiences on these platforms.

Meta AI

Meta’s recently launched Meta AI is now freely available to users of Meta platforms such as Facebook and Instagram. Meta AI is powered by Meta’s large language model, Llama 3. Meta’s published benchmarks show its new LLM outperforming Google’s Gemini and Anthropic’s Claude 3, though direct comparisons with OpenAI’s GPT-4 are conspicuously absent.

Like other generative AI chatbots, Meta AI is promoted as a virtual assistant that users can engage with through natural language prompts. Its distinctive feature is direct integration into Meta’s existing social media platforms: Instagram users don’t need a separate application to interact with Meta AI, and can instead chat with it directly within the Instagram app. Meta AI also has its own dedicated website that can be used without an account, though some features (such as image generation) require users to log in with their Facebook account.

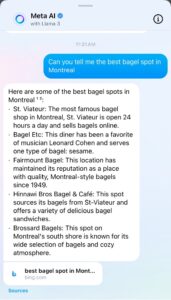

As a virtual assistant, Meta AI can do everything from providing recipes to searching for upcoming events. It can also be tagged into existing chat threads between users to help answer questions or find information. In this way, Meta AI might be seen as a replacement for search engines, since it can give users personalized responses to a wide range of queries without making them leave the app, though its responses often rely on, and link directly to, Google or Bing search results.

If Meta’s virtual assistant catches on, it could lead to users spending more time on Meta-owned platforms, and less time on competing search engines like Bing and Google. For marketers, this presents some interesting opportunities. For instance, search engine optimization efforts may be more effective if they’re aligned with the kinds of long-tail keywords and natural language phrases that generative AI chatbots tend to favor.

One of the best ways for marketing educators to familiarize their students with Meta AI is to have them experiment with the platform. Students can be tasked with using Meta AI to complete a range of research-based tasks, from summarizing complex topics to finding recommendations for restaurants in their area. Through this kind of hands-on experience, students will gain a better understanding of which tasks Meta AI is well-suited for, as well as some of the current limitations of the virtual assistant.

Grok

Developed by Elon Musk’s xAI, Grok is an AI-powered virtual assistant integrated with X (the social media platform formerly known as Twitter). Grok is powered by Grok-1.5, the latest version of xAI’s large language model. Grok-1.5 posts a particularly high score on HumanEval, a benchmark that evaluates an LLM’s ability to generate code that solves programming problems.

In contrast to Meta AI, Grok is presented as a premium service available only to subscribers to X Premium, X’s paid subscription tier. In this sense, Grok is positioned similarly to other paid AI chatbots, such as ChatGPT Plus, with the added benefit of direct integration with a social media platform. While Grok is still in a relatively early stage of development, its benchmarks show that its underlying LLM is already fairly sophisticated, making it a strong option for people looking to pay for a premium virtual assistant.

While most of Grok’s features are reserved for paying subscribers, X is beginning to test a feature that uses AI to summarize trending topics on the platform. In theory, this is a compelling application of generative AI technology, as it leverages Grok’s ability to parse the huge volume of data generated by X’s users into useful summaries, similar to a social listening tool.

In practice, though, these AI-generated summaries have proved unreliable. Like other LLMs, Grok-1.5 appears to sometimes struggle with context and word-sense disambiguation (working out which meaning of a word or phrase is intended). As a result, several of the trending news stories it generated were based on misreadings of X users’ posts. For instance, Grok recently generated a story with the headline “Klay Thompson Accused in Bizarre Brick-Vandalism Spree,” with a brief article stating that Thompson, the shooting guard for the Golden State Warriors, was “accused of vandalizing multiple houses with bricks in Sacramento” and was under investigation by the police. The story stemmed from the LLM misunderstanding the phrase “throwing bricks,” which in basketball slang means badly missing shots, and it demonstrates the current limitations of AI-generated news summaries.

For marketing educators, Grok’s confused news summary is an opportunity to consider some of the limits of generative AI. While large language models have made significant strides in recent months, there are still notable blind spots in their ability to parse the meaning of human writing. This isn’t only a problem for Grok: many marketers work with social listening tools that rely on similar LLMs with similar limitations. Students can be tasked with finding additional examples of problems caused by failures of word-sense disambiguation, as well as other context-dependent errors such as misreading jokes and sarcasm. Students can then discuss how these kinds of limitations can be managed through human oversight and review.

To learn more about how artificial intelligence is impacting the world of digital marketing, check out Mujo’s Artificial Intelligence Marketing textbook for higher education and Foundations of Artificial Intelligence textbook for high schools.